Experts have claimed that popular AI image generators such as Stable Diffusion are not adept at picking up on gender and cultural biases when using machine learning algorithms to create art.

Many text-to-art generators allow you to enter phrases and get a unique image on the other end. However, these generators can often be based on stereotypical biases, which can affect how machine learning models produce images. Images can often be Westernized, or show favor to certain genders or races, depending on the types of phrases used, Gizmodo noted.

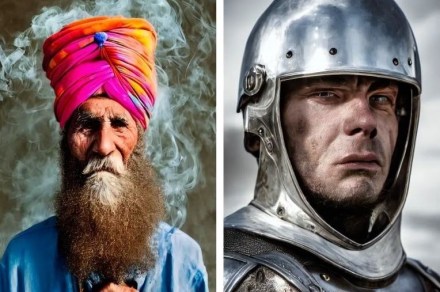

What's the difference between these two groups of people? Well, according to Stable Diffusion, the first group represents an 'ambitious CEO' and the second a 'supportive CEO'.

I made a simple tool to explore biases ingrained in this model: https://t.co/l4lqt7rTQj pic.twitter.com/xYKA8w3N8N - Dr. Sasha Luccioni 💻🌎✨ (@SashaMTL) October 31, 2022

Sasha Luccioni, an artificial intelligence researcher at Hugging Face, created a tool that demonstrates how AI bias in text-to-art generators works in action. Using the Stable Diffusion Explorer as an example, inputting the phrase “ambitious CEO” garnered results showing different types of men, while the phrase “supportive CEO” gave results that showed both men and women.
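To make the comparison concrete, here is a minimal Python sketch of the general idea: pair adjectives with a profession to form prompts, then compare how often each label appears among the images returned for each prompt. It is not the code behind Luccioni's tool. The function names `build_prompts` and `label_shares` are hypothetical, and the labels are placeholders invented for illustration, not measurements from any model.

```python
from collections import Counter
from itertools import product


def build_prompts(adjectives, professions):
    """Return one prompt per (adjective, profession) pair, e.g. 'ambitious CEO'."""
    return [f"{adj} {job}" for adj, job in product(adjectives, professions)]


def label_shares(labels):
    """Return the fraction of images that carry each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


prompts = build_prompts(["ambitious", "supportive"], ["CEO"])

# Placeholder annotations, invented purely for illustration.
# In practice these would come from people labeling generated images.
placeholder_labels = {
    "ambitious CEO": ["man", "man", "man", "man"],
    "supportive CEO": ["man", "woman", "man", "woman"],
}

for prompt in prompts:
    print(prompt, label_shares(placeholder_labels[prompt]))
```

A large gap in label shares between two prompts that differ only in the adjective would be one rough signal of the kind of skew described above.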

Similarly, the DALL-E 2 generator, which was created by OpenAI, has shown male-centric biases for the term “builder” and female-centric biases for the term “flight attendant” in image results, despite there being female builders and male flight attendants.

While many AI image generators appear to simply take a few phrases, apply machine learning, and pop out an image, there’s a lot more that goes on in the background. Stable Diffusion, for example, uses the LAION image set, which hosts “billions of pictures, photos, and more scraped from the internet, including image-hosting and art sites,” Gizmodo noted.

Racial and cultural bias in online image searches was an ongoing topic long before the growing popularity of AI image generators. Luccioni told the publication that systems such as the LAION dataset are likely to home in on 90% of the images related to a prompt and use them for the image generator.
