Creating 3D objects to build scenes for video games, virtual worlds including the metaverse, product design or visual effects is traditionally a meticulous process, in which skilled artists balance detail and photorealism against deadlines and budget pressures.

It takes a long time to make something that looks and acts as it would in the physical world. And the problem gets harder when multiple objects and characters need to interact in a virtual world. Simulating physics becomes just as important as simulating light. A robot in a virtual factory, for example, needs to have not only the same look, but also the same weight capacity and braking capability as its physical counterpart.

It’s hard. But the opportunities are huge, affecting trillion-dollar industries as varied as transportation, healthcare, telecommunications and entertainment, in addition to product design. Ultimately, more content will be created in the virtual world than in the physical one.

To simplify and shorten this process, NVIDIA today released new research and a broad suite of tools that apply the power of neural graphics to the creation and animation of 3D objects and worlds.

These SDKs (including NeuralVDB, a groundbreaking update to the industry-standard OpenVDB, and Kaolin Wisp, a PyTorch library establishing a framework for neural fields research) ease the creative process for designers while making it easy for millions of users who aren’t design professionals to create 3D content.

Neural graphics is a new field intertwining AI and graphics to create an accelerated graphics pipeline that learns from data. Integrating AI enhances results, helps automate design choices and provides new, yet-to-be-imagined opportunities for artists and creators. Neural graphics will redefine how virtual worlds are created, simulated and experienced by users.

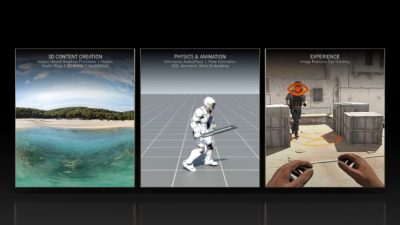

These SDKs and research contribute to each stage of the content creation pipeline, including:

3D Content Creation

- Kaolin Wisp – an addition to Kaolin, a PyTorch library enabling faster 3D deep learning research by reducing the time needed to test and implement new techniques from weeks to days. Kaolin Wisp is a research-oriented library for neural fields, establishing a common suite of tools and a framework to accelerate new research in neural fields.

- Instant Neural Graphics Primitives – a new approach to capturing the shape of real-world objects, and the inspiration behind NVIDIA Instant NeRF, an inverse rendering model that turns a collection of still images into a digital 3D scene. This technique and the associated GitHub code accelerate the process by up to 1,000x.

- 3D MoMa – a new inverse rendering pipeline that allows users to quickly import a 2D object into a graphics engine to create a 3D object that can be modified with realistic materials, lighting and physics.

- GauGAN360 – the next evolution of NVIDIA GauGAN, an AI model that turns rough doodles into photorealistic masterpieces. GauGAN360 generates 8K, 360-degree panoramas that can be ported into Omniverse scenes.

- Omniverse Avatar Cloud Engine (ACE) – a new collection of cloud APIs, microservices and tools to create, customize and deploy digital human applications. ACE is built on NVIDIA’s Unified Compute Framework, allowing developers to seamlessly integrate core NVIDIA AI technologies into their avatar applications.

Physics and Animation

- NeuralVDB – a groundbreaking improvement on OpenVDB, the current industry standard for volumetric data storage. Using machine learning, NeuralVDB introduces compact neural representations, dramatically reducing memory footprint to allow for higher-resolution 3D data.

- Omniverse Audio2Face – an AI technology that generates expressive facial animation from a single audio source. It’s useful for interactive real-time applications and as a traditional facial animation authoring tool.

- ASE: Animation Skills Embedding – an approach enabling physically simulated characters to act in a more responsive and lifelike manner in unfamiliar situations. It uses deep learning to teach characters how to respond to new tasks and actions.

- TAO Toolkit – a framework that enables users to create an accurate, high-performance pose estimation model, which can evaluate what a person might be doing in a scene using computer vision much more quickly than current methods.

Experience

- Image Features Eye Tracking – a research model linking the quality of pixel rendering to a user’s reaction time. By predicting the best combination of rendering quality, display properties and viewing conditions for the least latency, it will allow for better performance in fast-paced, interactive computer graphics applications such as competitive gaming.

- Holographic Glasses for Virtual Reality – a collaboration with Stanford University on a new VR glasses design that delivers full-color 3D holographic images in a groundbreaking 2.5-mm-thick optical stack.

Join NVIDIA at SIGGRAPH to see more of the latest research and technology breakthroughs in graphics, AI and virtual worlds. Check out the latest innovations from NVIDIA Research, and access the full suite of NVIDIA’s SDKs, tools and libraries.
