
Recommender systems, the economic engines of the internet, are getting a new turbocharger: the NVIDIA Grace Hopper Superchip.

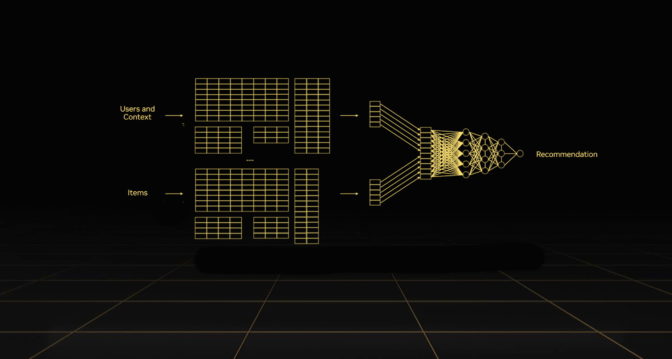

Every day, recommenders serve up trillions of search results, ads, products, music and news stories to billions of people. They’re among the most important AI models of our time because they’re incredibly effective at finding, in the internet’s pandemonium, the pearls users want.

These machine learning pipelines run on data, terabytes of it. The more data recommenders consume, the more accurate their results and the more return on investment they deliver.

To process this data tsunami, companies are already adopting accelerated computing to personalize services for their customers. Grace Hopper will take their advances to the next level.

GPUs Drive 16% More Engagement

Pinterest, the image-sharing social media company, was able to move to 100x larger recommender models by adopting NVIDIA GPUs. That increased engagement by 16% for its more than 400 million users.

“Normally, we would be happy with a 2% increase, and 16% is just a beginning,” a software engineer at the company said in a recent blog. “We see additional gains; it opens a lot of doors for opportunities.”

The next generation of the NVIDIA AI platform promises even greater gains for companies processing massive datasets with super-sized recommender models.

Because data is the fuel of AI, Grace Hopper is designed to pump more data through recommender systems than any other processor on the planet.

NVLink Accelerates Grace Hopper

Grace Hopper achieves this because it’s a superchip: two chips in one unit, sharing a superfast chip-to-chip interconnect. It’s an Arm-based NVIDIA Grace CPU and a Hopper GPU that communicate over NVIDIA NVLink-C2C.

What’s more, NVLink also connects many superchips into a super system, a computing cluster built to run terabyte-class recommender systems.

NVLink carries data at a whopping 900 gigabytes per second, 7x the bandwidth of PCIe Gen 5, the interconnect most leading-edge upcoming systems will use.

That means Grace Hopper feeds recommenders 7x more of the embeddings (data tables packed with context) that they need to personalize results for users.
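To put the 7x figure in concrete terms, here is a minimal back-of-the-envelope sketch. It uses only the two numbers quoted above (900 gigabytes per second and the 7x advantage); the batch size, embedding width and float32 storage are hypothetical values picked purely for illustration, and the calculation ignores latency and protocol overhead.

```python
# Figures quoted in the article.
NVLINK_GB_PER_S = 900.0   # NVLink bandwidth in gigabytes per second
NVLINK_VS_PCIE = 7.0      # stated bandwidth advantage over PCIe Gen 5

# Hypothetical workload, chosen only for illustration.
NUM_LOOKUPS = 1_000_000   # embedding rows fetched per batch
EMBEDDING_DIM = 128       # values per embedding row
BYTES_PER_VALUE = 4       # float32 storage


def batch_bytes(num_lookups: int, dim: int, bytes_per_value: int) -> int:
    """Bytes moved when fetching `num_lookups` embedding rows."""
    return num_lookups * dim * bytes_per_value


def transfer_ms(num_bytes: int, gb_per_s: float) -> float:
    """Ideal transfer time in milliseconds at the given bandwidth."""
    return num_bytes / (gb_per_s * 1e9) * 1e3


payload = batch_bytes(NUM_LOOKUPS, EMBEDDING_DIM, BYTES_PER_VALUE)

# PCIe Gen 5 bandwidth as implied by the article's 7x claim, not a spec value.
pcie_gb_per_s = NVLINK_GB_PER_S / NVLINK_VS_PCIE

nvlink_ms = transfer_ms(payload, NVLINK_GB_PER_S)
pcie_ms = transfer_ms(payload, pcie_gb_per_s)

print(f"payload per batch: {payload / 1e6:.0f} MB")
print(f"NVLink: {nvlink_ms:.3f} ms, PCIe Gen 5 (implied): {pcie_ms:.3f} ms")
```

In this idealized model the time to move a batch of embeddings scales inversely with bandwidth, so the same batch takes 7x longer over the slower link, or equivalently 7x more embeddings arrive in the same time over the faster one.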

More Memory, Greater Efficiency

The Grace CPU uses LPDDR5X, a type of memory that strikes the optimal balance of bandwidth, energy efficiency, capacity and cost for recommender systems and other demanding workloads. It provides 50% more bandwidth while using an eighth of the power per gigabyte of traditional DDR5 memory subsystems.

Any Hopper GPU in a cluster can access Grace’s memory over NVLink. It’s a feature of Grace Hopper that provides the largest pools of GPU memory ever.

In addition, NVLink-C2C requires just 1.3 picojoules per bit transferred, giving it more than 5x the energy efficiency of PCIe Gen 5.
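As a rough illustration of what 1.3 picojoules per bit means at terabyte scale, the sketch below converts that figure into joules per terabyte moved. The 1-terabyte payload is a hypothetical example, and the PCIe Gen 5 number is only the lower bound implied by the "more than 5x" claim above, not a measured or specified value.

```python
# Figures quoted in the article.
C2C_PJ_PER_BIT = 1.3          # NVLink-C2C energy per bit transferred
EFFICIENCY_ADVANTAGE = 5.0    # "more than 5x" versus PCIe Gen 5

# Hypothetical payload, chosen only for illustration.
PAYLOAD_BYTES = 1e12          # one terabyte of embedding data


def transfer_energy_joules(num_bytes: float, pj_per_bit: float) -> float:
    """Energy spent on the link to move `num_bytes`, in joules."""
    bits = num_bytes * 8
    return bits * pj_per_bit * 1e-12


c2c_joules = transfer_energy_joules(PAYLOAD_BYTES, C2C_PJ_PER_BIT)

# Lower bound for PCIe Gen 5 implied by the 5x claim (assumption, not a spec value).
pcie_pj_per_bit_floor = C2C_PJ_PER_BIT * EFFICIENCY_ADVANTAGE
pcie_joules_floor = transfer_energy_joules(PAYLOAD_BYTES, pcie_pj_per_bit_floor)

print(f"NVLink-C2C: {c2c_joules:.1f} J per terabyte")
print(f"PCIe Gen 5: more than {pcie_joules_floor:.1f} J per terabyte (implied)")
```

The per-terabyte numbers are small on their own, but a recommender that streams embeddings continuously pays this cost on every transfer, which is why energy per bit matters for cluster-scale efficiency.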

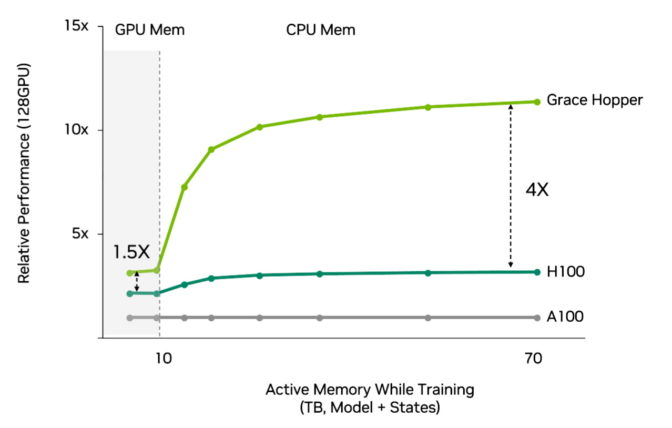

The overall result is that recommenders get up to 4x more performance and greater efficiency using Grace Hopper than using Hopper with traditional CPUs (see chart).

All the Software You Need

The Grace Hopper Superchip runs the full stack of NVIDIA AI software used in some of the world’s largest recommender systems today.

NVIDIA Merlin is the rocket fuel of recommenders, a collection of models, methods and libraries for building AI systems that can provide better predictions and increase clicks.

NVIDIA Merlin HugeCTR, a recommender framework, helps users process massive datasets fast across distributed GPU clusters with help from the NVIDIA Collective Communications Library.

Learn more about Grace Hopper and NVLink in this technical blog. Watch this GTC session to learn more about building recommender systems.

You can also hear NVIDIA CEO and co-founder Jensen Huang provide perspective on recommenders here or watch the full GTC keynote below.
