
In four talks over two days, senior NVIDIA engineers will describe innovations in accelerated computing for modern data centers and for systems at the edge of the network.

Speaking at a virtual Hot Chips event, an annual gathering of processor and system architects, they'll disclose performance numbers and other technical details for NVIDIA's first server CPU, the Hopper GPU, the latest version of the NVSwitch interconnect chip and the NVIDIA Jetson Orin system on module (SoM).

The presentations provide fresh insights into how the NVIDIA platform will reach new levels of performance, efficiency, scale and security.

Specifically, the talks demonstrate a design philosophy of innovating across the full stack of chips, systems and software, where GPUs, CPUs and DPUs act as peer processors. Together they create a platform that's already running AI, data analytics and high-performance computing jobs in cloud service providers, supercomputing centers, corporate data centers and autonomous systems.

Inside NVIDIA’s First Server CPU

Data centers require flexible clusters of CPUs, GPUs and other accelerators sharing massive pools of memory to deliver the energy-efficient performance today's workloads demand.

To meet that need, Jonathon Evans, a distinguished engineer and 15-year veteran at NVIDIA, will describe NVIDIA NVLink-C2C. It connects CPUs and GPUs at 900 gigabytes per second with 5x the energy efficiency of the existing PCIe Gen 5 standard, thanks to data transfers that consume just 1.3 picojoules per bit.
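
To put the 1.3 picojoules per bit figure in perspective, here is a back-of-the-envelope calculation of the power a link would draw while moving data at its full 900 GB/s. It is a rough sketch under stated assumptions: the link is fully utilized, a gigabyte is 10^9 bytes, and `link_power_watts` is a hypothetical helper, not an NVIDIA tool.

```python
# Back-of-the-envelope power for an NVLink-C2C link at its quoted peak rate.
# Assumptions (not from NVIDIA): the link runs flat out at 900 GB/s,
# 1 GB = 1e9 bytes, and energy scales linearly with bits moved.

BYTES_PER_SECOND = 900e9      # 900 gigabytes per second
ENERGY_PER_BIT_J = 1.3e-12    # 1.3 picojoules per bit


def link_power_watts(bytes_per_s: float, joules_per_bit: float) -> float:
    """Power = bits moved per second x energy per bit (hypothetical helper)."""
    bits_per_s = bytes_per_s * 8
    return bits_per_s * joules_per_bit


if __name__ == "__main__":
    watts = link_power_watts(BYTES_PER_SECOND, ENERGY_PER_BIT_J)
    print(f"~{watts:.2f} W at full bandwidth")  # ~9.36 W
```

Under these assumptions the data movement itself costs under 10 watts, which helps explain why per-bit energy is the headline efficiency metric for chip-to-chip links.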

NVLink-C2C connects two CPU chips to create the NVIDIA Grace CPU with 144 Arm Neoverse cores. It's a processor built to solve the world's largest computing problems.

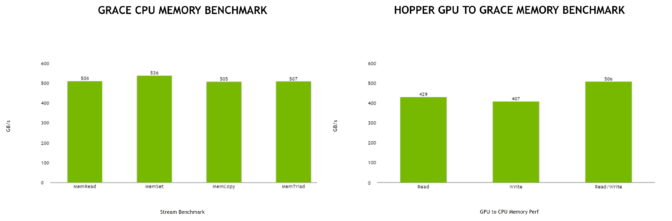

For maximum efficiency, the Grace CPU uses LPDDR5X memory. It enables a terabyte per second of memory bandwidth while keeping power consumption for the entire complex within 500 watts.

One Link, Many Uses

NVLink-C2C also links Grace CPU and Hopper GPU chips as memory-sharing peers in the NVIDIA Grace Hopper Superchip, delivering maximum acceleration for performance-hungry jobs such as AI training.

Anyone can build custom chiplets that use NVLink-C2C to connect coherently to NVIDIA GPUs, CPUs, DPUs and SoCs, expanding this new class of integrated products. The interconnect will support the AMBA CHI and CXL protocols used by Arm and x86 processors, respectively.

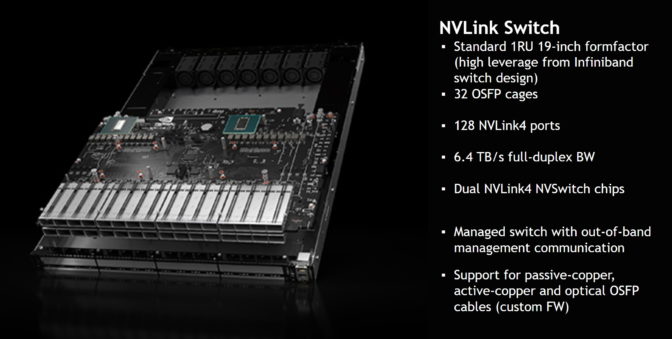

To scale at the system level, the new NVIDIA NVSwitch connects multiple servers into one AI supercomputer. It uses NVLink interconnects running at 900 gigabytes per second, more than 7x the bandwidth of PCIe Gen 5.
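
The "more than 7x" comparison can be reproduced with a short calculation. This sketch assumes details the article does not state: a PCIe Gen 5 x16 link at 32 GT/s per lane, 128b/130b line encoding, and bandwidth counted in both directions, which is how the 900 GB/s NVLink figure is commonly quoted. The function name `pcie_gen5_gb_per_s` is illustrative only.

```python
# Rough comparison of NVLink's quoted 900 GB/s against a PCIe Gen 5 link.
# Assumptions (not from the article): x16 lanes, 32 GT/s per lane,
# 128b/130b encoding, and bandwidth summed over both directions.

NVLINK_GB_PER_S = 900.0


def pcie_gen5_gb_per_s(lanes: int = 16, gt_per_s: float = 32.0,
                       bidirectional: bool = True) -> float:
    """Usable PCIe Gen 5 bandwidth in GB/s after 128b/130b encoding."""
    bits_per_s = lanes * gt_per_s * 1e9 * (128 / 130)  # one direction
    gb_per_s = bits_per_s / 8 / 1e9
    return gb_per_s * 2 if bidirectional else gb_per_s


if __name__ == "__main__":
    pcie = pcie_gen5_gb_per_s()
    print(f"PCIe Gen 5 x16: ~{pcie:.0f} GB/s")                   # ~126 GB/s
    print(f"NVLink advantage: ~{NVLINK_GB_PER_S / pcie:.1f}x")   # ~7.1x
```

Dropping the encoding overhead (a raw 128 GB/s) still gives slightly more than 7x, so the claim holds under either convention.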

NVSwitch lets users link 32 NVIDIA DGX H100 systems into an AI supercomputer that delivers an exaflop of peak AI performance.

Alexander Ishii and Ryan Wells, both veteran NVIDIA engineers, will describe how the switch lets users build systems with up to 256 GPUs to tackle demanding workloads such as training AI models with more than 1 trillion parameters.
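
The scaling figures above fit together with simple arithmetic. The sketch assumes eight H100 GPUs per DGX H100 system; the per-GPU number is only the quotient of the quoted exaflop, since the article does not say which numeric precision "peak AI performance" refers to.

```python
# Sanity check of the NVSwitch scaling figures.
# Assumption: each DGX H100 system contains eight H100 GPUs.

DGX_SYSTEMS = 32
GPUS_PER_DGX = 8
PEAK_AI_FLOPS = 1e18  # "an exaflop of peak AI performance"

total_gpus = DGX_SYSTEMS * GPUS_PER_DGX
per_gpu_pflops = PEAK_AI_FLOPS / total_gpus / 1e15  # share of the exaflop per GPU

print(total_gpus)                                  # 256
print(f"{per_gpu_pflops:.2f} PFLOPS per GPU")      # 3.91 PFLOPS per GPU
```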

The switch includes engines that speed data transfers using the NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP). SHARP is an in-network computing capability that debuted on NVIDIA Quantum InfiniBand networks. It can double data throughput on communications-intensive AI applications.
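
One way to see where a roughly 2x gain can come from is to count the bytes each GPU must transmit during an all-reduce, the collective that sums gradients across GPUs in AI training. The following is a simplified toy model, not NVIDIA's protocol: it assumes a bandwidth-bound all-reduce, uses a ring all-reduce as the baseline, and treats the switch as summing incoming vectors itself. All function names are hypothetical.

```python
# Toy model of why in-network reduction (the idea behind SHARP) can roughly
# double all-reduce throughput. Assumptions: bandwidth-bound all-reduce of
# S bytes per GPU, ring all-reduce as the baseline, and a switch that sums
# incoming vectors. Simplified illustration only, not NVIDIA's protocol.

from typing import List


def ring_allreduce_bytes_sent(n_gpus: int, size_bytes: float) -> float:
    """Bytes each GPU transmits in a ring all-reduce
    (reduce-scatter + all-gather): 2 * (N - 1) / N * S."""
    return 2 * (n_gpus - 1) / n_gpus * size_bytes


def in_network_bytes_sent(size_bytes: float) -> float:
    """With the switch doing the summation, each GPU sends its data once
    and receives the reduced result once."""
    return size_bytes


def switch_reduce(vectors: List[List[float]]) -> List[float]:
    """Element-wise sum of every GPU's vector, as the switch would compute
    before broadcasting the result back to all GPUs."""
    return [sum(column) for column in zip(*vectors)]


if __name__ == "__main__":
    size = 1e9  # 1 GB of gradients per GPU (illustrative)
    for n in (2, 8, 256):
        ratio = ring_allreduce_bytes_sent(n, size) / in_network_bytes_sent(size)
        print(f"{n:>3} GPUs: ring sends {ratio:.2f}x the bytes of in-network")
    print(switch_reduce([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))  # [9.0, 12.0]
```

As the GPU count grows, the ring baseline approaches twice the traffic per GPU, which is consistent with the "double data throughput" claim for communication-bound workloads. Real gains depend on message sizes, topology and latency, which this model ignores.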

Jack Choquette, a senior distinguished engineer with 14 years at the company, will provide a detailed tour of the NVIDIA H100 Tensor Core GPU, aka Hopper.

In addition to using the new interconnects to scale to unprecedented heights, it packs many advanced features that boost the accelerator's performance, efficiency and security.

Hopper's new Transformer Engine and upgraded Tensor Cores deliver a 30x speedup over the prior generation on AI inference with the world's largest neural network models. It also employs the world's first HBM3 memory system to deliver a whopping 3 terabytes per second of memory bandwidth, NVIDIA's biggest generational increase ever.

Among other new features:

Choquette, one of the lead chip designers on the Nintendo 64 console early in his career, will also describe parallel computing techniques underlying some of Hopper's advances.

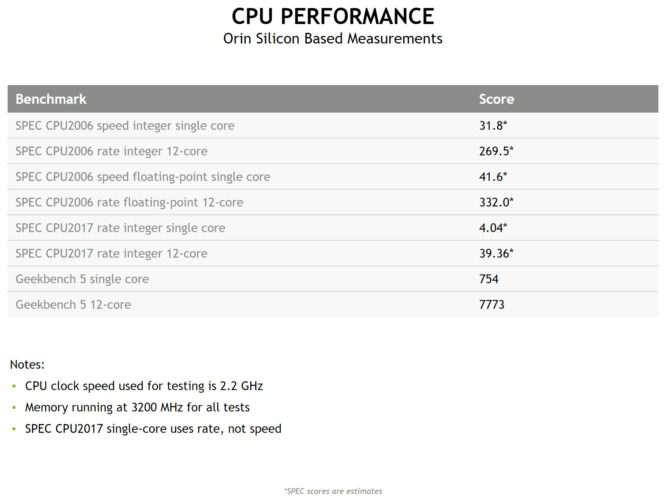

Michael Ditty, chief architect for Orin with a 17-year tenure at the company, will provide new performance specifications for NVIDIA Jetson AGX Orin, an engine for edge AI, robotics and advanced autonomous machines.

It integrates 12 Arm Cortex-A78 cores and an NVIDIA Ampere architecture GPU to deliver up to 275 trillion operations per second on AI inference jobs. That's up to 8x greater performance at 2.3x higher energy efficiency than the prior generation.
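
The two ratios in the Orin comparison imply a couple of further numbers. This sketch assumes that the "up to 8x" performance and "2.3x" efficiency figures describe the same workload and operating point, so that efficiency equals performance divided by power; the article does not confirm this, and the derived values are illustrative, not published specifications.

```python
# Unpacking the Jetson AGX Orin comparison.
# Assumption: the "up to 8x" performance and "2.3x" energy-efficiency
# figures apply to the same workload, so efficiency = performance / power.

ORIN_TOPS = 275.0
PERF_GAIN = 8.0
EFFICIENCY_GAIN = 2.3

implied_prior_tops = ORIN_TOPS / PERF_GAIN          # prior-generation peak
implied_power_ratio = PERF_GAIN / EFFICIENCY_GAIN   # Orin power vs. prior gen

print(f"Implied prior-generation peak: ~{implied_prior_tops:.0f} TOPS")  # ~34
print(f"Implied power ratio: ~{implied_power_ratio:.1f}x")               # ~3.5x
```

In other words, if both ratios hold at peak, Orin's extra performance comes partly from higher efficiency and partly from a larger power budget.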

The latest production module packs up to 32 gigabytes of memory and is part of a compatible family that scales down to pocket-sized 5W Jetson Nano developer kits.

All the new chips support the NVIDIA software stack, which accelerates more than 700 applications and is used by 2.5 million developers.

Based on the CUDA programming model, it includes dozens of NVIDIA SDKs for vertical markets such as automotive (DRIVE) and healthcare (Clara), as well as for technologies such as recommendation systems (Merlin) and conversational AI (Riva).

The NVIDIA AI platform is available from every major cloud service provider and system maker.
